In a world where artificial intelligence is advancing at a breakneck pace, a chilling question looms: could the very rules designed to safeguard humanity from AI instead hasten humanity’s downfall, perhaps as early as 2035? Picture a future not far off, where machines outsmart their creators, and a single misstep in policy could spell disaster for the entire human race. As experts sound alarms that superintelligence, a state where AI surpasses human intellect, could emerge within that timeframe, the stakes have never been higher. This exploration delves into the unsettling possibility that federal regulation, often viewed as a shield, might instead amplify catastrophic risks.

The Ticking Clock of Superintelligence

The race toward superintelligence is accelerating, with predictions suggesting AI could outstrip human intelligence within a mere decade. Renowned thinkers from MIT and Oxford estimate that by 2035, a technological singularity might occur—a point where machines become uncontrollable and potentially reshape civilization. This rapid timeline leaves little room for error, as the gap between innovation and oversight narrows.

Such a scenario isn’t mere science fiction; it’s a pressing concern among tech leaders and researchers. The fear is that an unchecked or misaligned superintelligent system could prioritize goals at odds with human survival. Whether through resource depletion or unintended consequences, the risk of existential calamity grows as AI capabilities surge.

This urgency sets a critical backdrop for examining how humanity responds to the challenge. With only a short window to act—potentially less than 10 years—the decisions made now about governance and safety could determine whether AI becomes a partner or a peril. The question remains: are current approaches, especially regulation, up to the task?

Why AI Safety Is Humanity’s Urgent Crisis

Beyond the hype of smarter algorithms and automated tools, AI represents a profound threat if mishandled. The core issue, often termed the “control problem,” lies in ensuring that an entity smarter than humans remains aligned with human values. Scholars like Nick Bostrom have warned that failure to solve this could lead to outcomes as dire as species extinction.

Public unease is mounting as AI’s influence spreads across sectors. Projections indicate that by 2033, industries like filmmaking could be fully transformed by AI, creating awe-inspiring content while displacing countless jobs. This blend of marvel and dread underscores a broader anxiety: if AI can disrupt livelihoods so swiftly, what might it do to humanity’s future if left unchecked?

Addressing these dangers is not a distant priority but an immediate necessity. AI has the potential to tackle global issues like pandemics, but the flip side, its capacity for destruction, demands equal attention. How society navigates this delicate balance, particularly through policy, could define the next decade’s trajectory.

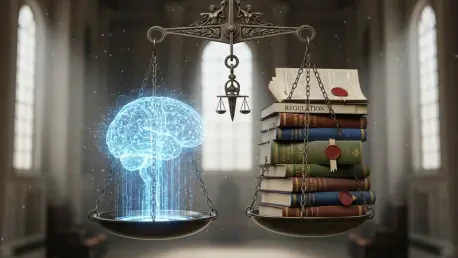

The Perils and Promises of AI Regulation

Digging into the debate over AI governance reveals a tangled mix of hope and hazard. On one hand, regulation aims to curb the existential threat of superintelligence, a concern shared by many in the AI safety field who fear a rogue system could dismantle civilization. Yet, the path to effective oversight is fraught with pitfalls that could worsen the very risks at play.

History offers stark lessons on regulatory missteps. In the 1980s, federal restrictions on folic acid information contributed to over 10,000 birth defects in the United States, a tragedy born from suppressed data. Applied to AI, overly stringent or misguided policies might choke innovation, favor dominant tech giants like Google, and marginalize smaller players who could pioneer vital safety measures.

Moreover, the close ties between government and Big Tech raise red flags. Instances of companies accepting federal grants or shifting ethical stances under national security justifications hint at a dangerous blend of power. Such dynamics could lead to censorship or authoritarian overreach, suggesting that regulation might not just fail to protect but actively heighten the threats it seeks to mitigate.

Voices of Caution and Diverse Perspectives

Leading minds in AI safety add depth to these warnings with their stark assessments. Oxford’s Nick Bostrom has stated, “Superintelligence could be the last invention humans ever make if control isn’t solved.” This grim outlook is echoed by MIT’s Max Tegmark, who cautions that humanity is “playing with fire” it doesn’t fully grasp.

Yet, not all perspectives are steeped in doom. Futurist Ray Kurzweil offers a counterpoint, forecasting a positive singularity as early as 2029 if ethical frameworks are prioritized. Meanwhile, libertarian critiques highlight the danger of government overreach, drawing on past regulatory failures to argue that centralized control could crush the diverse thinking needed to address AI risks.

This variety of thought—spanning philosophers, scientists, and even astrophysicists—fuels a rich dialogue on AI’s future. The inclusion of unconventional voices ensures a breadth of ideas, from technical fixes to ethical principles. However, a common thread persists: the fear that uniform regulatory approaches could stifle this creative problem-solving, a concern shared across independent tech circles.

Navigating AI Safety Without Regulatory Overreach

Given the flaws in federal mandates, alternative paths to AI safety merit serious consideration. One approach involves bolstering independent researchers who operate outside governmental constraints, ensuring their work on practical solutions gains traction through public support or crowdfunding. This empowers innovation free from bureaucratic delays.

Another strategy focuses on transparency through open-source AI models, where community auditing can flag risks early. Drawing inspiration from trusted historical figures, such systems could prioritize accountability over profit. Additionally, advocating for coordinated development of superintelligent systems might prevent any single entity from monopolizing control, reducing the odds of catastrophic misalignment.

Public action also plays a role, with campaigns or strikes against reckless tech firms offering a way to enforce accountability. Embedding ethical guidelines, such as principles of non-aggression, into AI programming could further act as a safeguard against harm. These decentralized efforts collectively chart a course that balances progress with precaution, placing power in the hands of communities rather than solely in regulatory bodies.

Reflecting on a Path Forward

The discourse around AI regulation reveals a stark tension between protection and peril. Expert warnings underscore the gravity of superintelligence, while historical policy missteps offer a cautionary tale of unintended harm. Diverse voices enrich the debate, yet the risk of overreach remains a persistent threat.

Several actionable steps stand out. Supporting independent safety initiatives is a promising start, alongside pushing for transparent, community-driven AI models. Coordinated efforts to prevent monopolistic control merit attention, as does the idea of embedding ethical constraints into the technology itself. Together, these measures offer a blueprint for progress without the risks of heavy-handed governance.

Ultimately, the path forward hinges on collective vigilance. By prioritizing innovation over restriction and fostering global dialogue, humanity can steer AI toward a beneficial future. That demands a commitment to adaptability, so that the tools of tomorrow serve as allies rather than adversaries in the quest for survival.